Ever wondered why some apps feel fast while others lag? The answer often lies in how well they use a memory cache. Caching keeps frequently used data close at hand, so the app doesn’t have to fetch it again every time. Because phones are now central to daily life, knowing how to cache well matters.

Good caching makes apps faster and more reliable. This article looks at how caching works on mobile devices and covers the main techniques and best practices for improving the user experience.

Introduction to Memory Caching in Mobile Environments

Memory caching improves app performance, especially on mobile devices. A cache stores frequently needed data so the app doesn’t have to request it again from a server, database, or disk. This makes apps faster and more pleasant to use.

On mobile devices, where memory, storage, and network bandwidth are limited, caching matters even more. Cached data lets an app stay responsive when the network is slow, which keeps users engaged with what they’re doing.

Understanding Cache Types

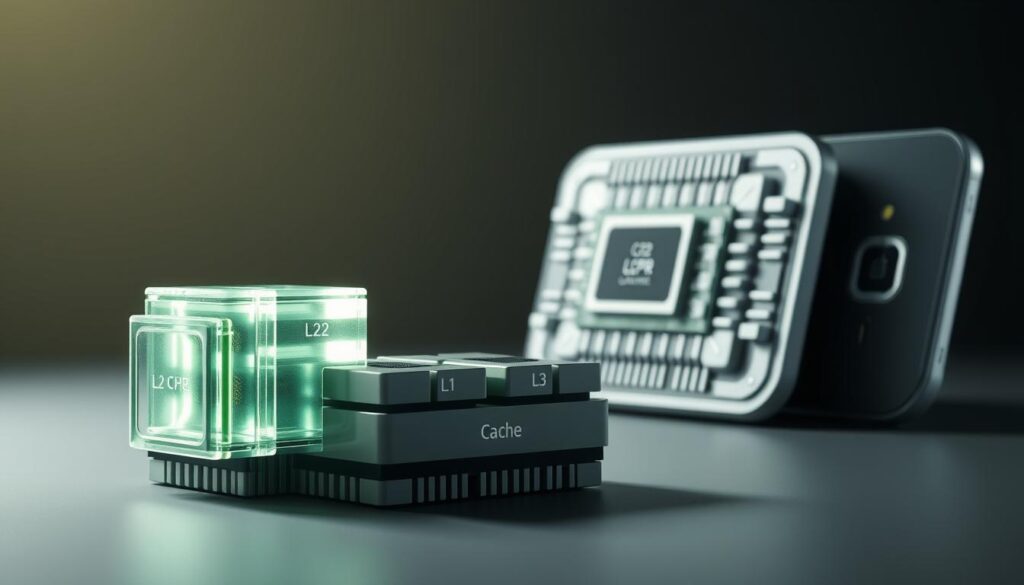

Different cache types suit different needs. This section covers in-memory caching, distributed caching, and client-side caching, along with the strengths and typical uses of each.

In-Memory Caching

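In-memory caching stores data in RAM, which is much faster to read than disk or the network. It suits data the app needs repeatedly and quickly, and it cuts wait times so the app feels smoother. The trade-off is that RAM is limited and its contents are lost when the process ends.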

Distributed Caching

Distributed caching spreads cached data across multiple servers. Because capacity grows as nodes are added, it suits the backends of apps with many users. Replicating entries across nodes also keeps data available if one server fails.

Client-Side Caching

Client-side caching stores data on the user’s device, which makes static resources such as images, fonts, and scripts load faster. It needs careful handling so users don’t see stale data; balancing speed against freshness is key to a good user experience.

Key Benefits of Caching for Mobile Applications

Caching gives mobile apps two main benefits: faster responses for users and less work for servers. Both matter most for apps that handle a lot of data.

Improved Application Performance

Caching stores data in memory, so the app can read it quickly instead of asking the server for it every time. Users see faster responses, and fast apps keep users happy.

Reduced Server Load

Every request answered from a cache is one the server doesn’t have to handle. This reduces server load during peak usage, lowers infrastructure costs, and makes the service more reliable, which benefits both users and businesses.

Cache Strategies for Mobile Environments

The right caching strategy affects both speed and data consistency. Each strategy has its own trade-offs, so developers should pick the one that fits their app’s needs. Three common strategies are cache-aside, write-through, and read-through.

Cache-Aside

With cache-aside (also called lazy loading), the application checks the cache before querying the database. On a miss, the application itself fetches the data from the database and stores it in the cache for later requests. Because application code manages the cache directly, developers keep full control over what gets cached.

This way, only data that has actually been requested takes up space in the cache.
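A minimal Java sketch of cache-aside, assuming a plain HashMap as the cache and a caller-supplied function standing in for the database. The class name CacheAsideRepository and its databaseReads counter are hypothetical, added only for illustration:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Cache-aside: the application checks the cache, and on a miss it loads
// from the data source itself and stores the result in the cache.
class CacheAsideRepository {
    private final Map<String, String> cache = new HashMap<>();
    // Hypothetical stand-in for a database or network call.
    private final Function<String, String> database;
    int databaseReads = 0; // counts trips to the slow source, for illustration

    CacheAsideRepository(Function<String, String> database) {
        this.database = database;
    }

    String get(String key) {
        String cached = cache.get(key);
        if (cached != null) {
            return cached;                  // cache hit
        }
        databaseReads++;
        String value = database.apply(key); // cache miss: read from the source
        if (value != null) {
            cache.put(key, value);          // populate the cache for next time
        }
        return value;
    }

    // After updating the source, drop the cached copy so the next read reloads it.
    void invalidate(String key) {
        cache.remove(key);
    }
}
```

Calling get("user123") twice triggers one database read; after invalidate("user123"), the next get goes to the database again.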

Write-Through

Write-through caching writes data to the cache and the database as part of the same operation, which keeps the two consistent. The cost is slower writes, since each write must complete in both places.

Still, it works well for apps where accurate, up-to-date data is critical.
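A minimal write-through sketch, assuming a hypothetical BackingStore interface in place of a real database. The store is written first, so a failed database write never leaves the cache holding a value the database lacks:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical interface for the durable store behind the cache.
interface BackingStore {
    void save(String key, String value);
}

// Write-through: every put updates the backing store and the cache in the
// same call, so a successful put leaves both copies consistent.
class WriteThroughCache {
    private final Map<String, String> cache = new HashMap<>();
    private final BackingStore store;

    WriteThroughCache(BackingStore store) {
        this.store = store;
    }

    void put(String key, String value) {
        store.save(key, value); // write to the store first; if this throws,
        cache.put(key, value);  // the cache is left unchanged
    }

    // Serves only values written through this cache; a real implementation
    // would also handle misses.
    String get(String key) {
        return cache.get(key);
    }
}
```

The extra latency of write-through is visible here: put cannot return until store.save has finished.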

Read-Through

Read-through caching makes the cache the application’s only point of contact for reads. On a miss, the cache itself loads the data from the database, stores it, and returns it. This keeps application code simpler than cache-aside and, like any cache, reduces database load.
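A read-through cache can be sketched in Java with Map.computeIfAbsent, which calls a loader only when the key is missing. The loader function is a hypothetical stand-in for the real data source, and this sketch ignores thread safety:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Read-through: callers only ever talk to the cache. The loader that knows
// how to fetch from the data source is configured once, inside the cache.
class ReadThroughCache<K, V> {
    private final Map<K, V> entries = new HashMap<>();
    private final Function<K, V> loader; // hypothetical data-source lookup

    ReadThroughCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        // computeIfAbsent calls the loader only on a miss and stores the
        // result; if the loader returns null, nothing is stored.
        return entries.computeIfAbsent(key, loader);
    }
}
```

The caller never sees the data source: it just calls get, and the cache decides whether a load is needed.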

| Caching Strategy | Advantages | Disadvantages |
|---|---|---|
| Cache-Aside | Flexible control over data caching, efficient for sporadic access. | Can lead to cache misses if data is not proactively stored. |
| Write-Through | Maintains data consistency between cache and database. | Potentially slower writes due to dual storage. |
| Read-Through | Improves retrieval speed and reduces database load. | Requires careful management of cache freshness. |

Optimize Memory Cache in Procedural Environments

Tuning the memory cache is key to better performance in procedural environments. Understanding how an app uses memory helps developers decide what to cache, and caching the right data makes apps load faster.

Three settings deserve attention. The cache size should fit each app: too small and useful entries are evicted early, too large and the cache crowds out other memory. Expiration times should keep data fresh without discarding it too soon. Finally, tracking the hit rate shows whether the cache is actually working.

Caching settings are not a one-time decision. Reviewing them regularly as usage patterns change keeps the app efficient and the user experience good.

Measuring Cache Effectiveness

Two metrics show how well a cache is working: the cache hit rate and the cache eviction rate. Tracking them helps developers tune performance and efficiency.

Cache Hit Rate Calculation

The cache hit rate shows how often requests are served from the cache. A high rate means the caching strategy is working well, since every hit avoids a slower trip to the data source and reduces latency for the user.

To calculate it, divide the number of cache hits by the total number of requests (hits plus misses). For example, 80 hits out of 100 requests gives a hit rate of 80%. A persistently low rate can signal that the cache is too small or is caching the wrong data.
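A minimal sketch of hit-rate tracking, assuming the cache calls the hypothetical recordHit and recordMiss methods on each lookup:

```java
// Counts hits and misses and reports hit rate = hits / (hits + misses).
class CacheStats {
    private long hits;
    private long misses;

    void recordHit()  { hits++; }
    void recordMiss() { misses++; }

    double hitRate() {
        long total = hits + misses;
        // Report 0.0 before any requests instead of dividing by zero.
        return total == 0 ? 0.0 : (double) hits / total;
    }
}
```

Recording 80 hits and 20 misses makes hitRate() return 0.8, matching the 80% in the example above.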

Analyzing Cache Eviction Rate

The cache eviction rate shows how often items are removed from the cache to make room for new ones. A high rate can point to a cache that is too small or a poorly chosen caching strategy. It also adds latency, because evicted data must be fetched again from slower systems.

By monitoring the eviction rate, developers can spot these problems and adjust cache size or strategy, balancing memory use against keeping relevant data cached.
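One way to measure eviction rate, sketched under the assumption of a size-bounded LRU (least recently used) policy built on java.util.LinkedHashMap’s access-order mode and its removeEldestEntry hook. Here eviction rate is defined as evictions per new key inserted, which is one reasonable definition among several; the class name is hypothetical:

```java
import java.util.LinkedHashMap;
import java.util.Map;

// An LRU cache capped at maxEntries that counts evictions.
class CountingLruCache<K, V> {
    private final int maxEntries;
    private final LinkedHashMap<K, V> map;
    private long insertions;
    private long evictions;

    CountingLruCache(int maxEntries) {
        this.maxEntries = maxEntries;
        // accessOrder = true: iteration runs from least to most recently used.
        this.map = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                boolean evict = this.size() > CountingLruCache.this.maxEntries;
                if (evict) CountingLruCache.this.evictions++;
                return evict; // true tells LinkedHashMap to drop the eldest entry
            }
        };
    }

    V get(K key) { return map.get(key); } // also marks the entry as recently used

    void put(K key, V value) {
        if (!map.containsKey(key)) insertions++; // count only new keys
        map.put(key, value);
    }

    int size() { return map.size(); }

    // Near 1.0 means almost every insert pushes another entry out,
    // a hint that the cache may be too small.
    double evictionRate() {
        return insertions == 0 ? 0.0 : (double) evictions / insertions;
    }
}
```

With a capacity of 2, putting "a" and "b", reading "a", then putting "c" evicts "b" (the least recently used entry), giving one eviction in three insertions.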

Real-Life Examples of Caching in Mobile Applications

Real examples from e-commerce and mobile banking show how caching makes apps faster and more user-friendly in practice.

E-Commerce Applications

E-commerce apps cache product information such as descriptions, images, and prices, so users can quickly find what they need, even during busy periods. Sites like Amazon and eBay use caching to handle large request volumes without slowing down.

Mobile Banking Applications

In mobile banking, caching gives users quick access to account information such as balances and recent transactions. Because this data is sensitive, banking apps must also be careful about what they cache and for how long. Apps like Chase and Bank of America use caching to keep their services fast.

Code Examples for Caching Strategies

Caching cuts wait times and server work. The following example shows a basic in-memory cache that covers storing, retrieving, and clearing data.

In-Memory Caching Implementation

In-memory caching stores data in the app’s memory for quick access. Below is a basic example in Java:

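```java
import java.util.HashMap;
import java.util.Map;

// Simple key-value storage for in-memory caching (not thread-safe)
class Cache {
    private final Map<String, Object> cacheStore = new HashMap<>();

    // Adds a value, replacing any existing value for the same key
    public void put(String key, Object value) {
        cacheStore.put(key, value);
    }

    // Returns the cached value, or null on a cache miss
    public Object get(String key) {
        return cacheStore.get(key);
    }

    // Removes every entry to free memory
    public void clear() {
        cacheStore.clear();
    }
}

// Example usage
class CacheDemo {
    public static void main(String[] args) {
        Object userData = "example user profile"; // placeholder for real app data
        Cache myCache = new Cache();
        myCache.put("user123", userData);
        Object cachedData = myCache.get("user123");
        System.out.println(cachedData);
    }
}
```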

The put method stores a value under a key, get retrieves it (returning null on a miss), and clear empties the cache to free memory. This cache has no size limit or expiration, so it grows until it is cleared.

For more complex needs, you might add cache eviction policies or type-specific caching. Here’s a comparison of features:

| Feature | Simple Cache | Advanced Cache |
|---|---|---|
| Key-Value Storage | Yes | Yes |
| TTL (time-to-live) Support | No | Yes |
| Eviction Policies | No | LRU, LFU, etc. |

Features like TTL and eviction policies keep an in-memory cache from serving stale data or growing without bound, which makes it practical for production apps.

Managing Cache Expiration Policies

Expiration policies keep cached data fresh in mobile apps. With well-chosen expiration times, users see current, accurate information while the app still gets the speed benefits of caching.

To choose expiration times, developers should study how often each kind of data changes and how often it is read. Data that rarely changes can stay cached for a long time; fast-changing data needs short expiration times or explicit invalidation.

Common methods for managing cache expiration include:

| Expiration Method | Description | Use Case |
|---|---|---|
| Time-Based Expiration | Data is automatically invalidated after a specific period. | Ideal for content that changes at predictable intervals. |
| Access-Based Expiration | Data expires after a set period without being accessed; each read resets the timer. | Useful for keeping frequently read data cached while dropping data nobody uses. |
| User-Triggered Expiration | Allows users to force a data refresh when necessary. | Applicable in applications where data accuracy is critical. |
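Time-based expiration can be sketched as a cache that records when each entry was written. The clock is injected as a LongSupplier, an assumption made so tests can control time; by default it uses System.nanoTime, which is monotonic and unaffected by wall-clock changes. The class name TtlCache is hypothetical:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Time-based expiration: a read after the TTL has passed counts as a miss.
class TtlCache<K, V> {
    private static final class Entry<T> {
        final T value;
        final long writtenAtMillis;
        Entry(T value, long writtenAtMillis) {
            this.value = value;
            this.writtenAtMillis = writtenAtMillis;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final long ttlMillis;
    private final LongSupplier clock; // returns the current time in milliseconds

    TtlCache(long ttlMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    TtlCache(long ttlMillis) {
        this(ttlMillis, () -> System.nanoTime() / 1_000_000);
    }

    void put(K key, V value) {
        entries.put(key, new Entry<>(value, clock.getAsLong()));
    }

    V get(K key) {
        Entry<V> e = entries.get(key);
        if (e == null) return null;
        if (clock.getAsLong() - e.writtenAtMillis >= ttlMillis) {
            entries.remove(key); // expired: drop it so the caller reloads
            return null;
        }
        return e.value;
    }

    // User-triggered expiration (for example, pull-to-refresh) just invalidates.
    void invalidate(K key) {
        entries.remove(key);
    }
}
```

Resetting an entry’s timestamp on each successful read would turn this into access-based expiration.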

Expiration policies tailored to each type of data protect data integrity: they keep information fresh without giving up the efficiency of caching.

Understanding Garbage Collection Effects on Cache

In mobile apps, the interaction between garbage collection and caching has a big effect on memory use. The garbage collector finds objects that are no longer reachable and reclaims their memory. This matters especially in Android development, where memory is managed automatically.

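Under heavy load, garbage collection can become costly. When an app allocates and discards many objects quickly, the collector runs more often, and its pauses can make the app stutter. A cache holds references to its entries, so they cannot be collected while cached; a cache that grows without limit adds memory pressure and makes collections more frequent.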

Developers can reduce these effects by keeping cache entries small, bounding the cache’s total size, and avoiding unnecessary allocations in frequently run code. This helps apps run smoother and use resources efficiently.
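Bounding a cache by approximate memory size rather than entry count keeps its share of the heap predictable. On Android, android.util.LruCache supports this idea by overriding its sizeOf method; the sketch below is a plain-Java approximation, assuming the caller supplies a size estimate per value (for example, a byte array’s length). The class name is hypothetical:

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.ToIntFunction;

// An LRU cache bounded by a memory budget instead of an entry count.
class SizeBoundedCache<K, V> {
    // accessOrder = true: iteration runs from least to most recently used.
    private final LinkedHashMap<K, V> map = new LinkedHashMap<>(16, 0.75f, true);
    private final ToIntFunction<V> sizer; // caller-supplied size estimate
    private final long maxSize;
    private long currentSize;

    SizeBoundedCache(long maxSize, ToIntFunction<V> sizer) {
        this.maxSize = maxSize;
        this.sizer = sizer;
    }

    V get(K key) { return map.get(key); }

    void put(K key, V value) {
        V previous = map.put(key, value);
        currentSize += sizer.applyAsInt(value);
        if (previous != null) currentSize -= sizer.applyAsInt(previous);
        trimToSize();
    }

    // Evicts least recently used entries until the budget is respected.
    private void trimToSize() {
        Iterator<Map.Entry<K, V>> it = map.entrySet().iterator();
        while (currentSize > maxSize && it.hasNext()) {
            Map.Entry<K, V> eldest = it.next();
            currentSize -= sizer.applyAsInt(eldest.getValue());
            it.remove();
        }
    }

    long currentSize() { return currentSize; }
}
```

Once an entry is evicted, the cache no longer references it, so the garbage collector can reclaim it if nothing else does.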

Memory Sharing Techniques in Android

Memory sharing helps Android run many apps on limited RAM. Instead of duplicating data, processes can map the same memory pages, which improves resource management across the system.

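Android shares memory between processes in several ways. Every app process is forked from a process called Zygote, which has already loaded common framework code and resources, so those pages are shared rather than duplicated; this also shortens app startup. Processes can also exchange large data through explicitly allocated shared memory regions (ashmem), which can be passed between processes over the Binder IPC mechanism.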

Effective memory sharing reduces each app’s memory footprint, letting developers make the most of limited resources, even on devices with less RAM.

Implementing High Availability in Caching

High availability keeps the cache service running when parts of it fail. The usual approach is redundancy: replicating cache entries across several nodes, so the app keeps working even if one node goes down.
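To illustrate replication without real servers, the sketch below models each node as an in-process map with an up flag. CacheNode and ReplicatedCache are hypothetical names, and a production system would use a real distributed cache rather than this toy. Writes go to every live replica, and reads fail over to the next replica:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// A simulated cache node that can be marked as down.
class CacheNode {
    final Map<String, String> data = new HashMap<>();
    boolean up = true;
}

// Writes go to all live replicas; reads try replicas in order until one answers.
class ReplicatedCache {
    private final List<CacheNode> replicas = new ArrayList<>();

    ReplicatedCache(int replicaCount) {
        for (int i = 0; i < replicaCount; i++) replicas.add(new CacheNode());
    }

    CacheNode node(int i) { return replicas.get(i); }

    void put(String key, String value) {
        for (CacheNode n : replicas) {
            if (n.up) n.data.put(key, value); // skip nodes that are down
        }
    }

    String get(String key) {
        for (CacheNode n : replicas) {
            if (!n.up) continue;              // fail over to the next replica
            String v = n.data.get(key);
            if (v != null) return v;
        }
        return null;                          // miss on every live replica
    }
}
```

This toy ignores problems that real systems must handle, such as a node that was down during a write returning stale data after it recovers.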

Distributing the cache across multiple locations also reduces latency and the risk of outages, and it lets the cache scale as traffic grows while keeping apps fast when problems occur.

For businesses that depend on user experience, high availability in caching is vital. Replication and a well-distributed cache keep apps running smoothly through server failures.

Best Practices for Caching in Procedural Environments

A few best practices can greatly improve mobile app performance in procedural environments. First, monitor cache performance regularly to spot issues that might slow the app down.

Second, choose a caching strategy that matches how the data is read and updated; a read-heavy product catalog and a frequently edited record need different approaches. Third, put data synchronization in place so cached copies stay accurate when the source data changes.

Finally, set an expiration policy so stale data is refreshed, keeping the app fast and reliable. Following these practices makes an app more efficient and user-friendly.

Challenges of Caching in Mobile Applications

Caching offers mobile apps big benefits, but it comes with challenges. The first is staleness: when the source data changes often, the cached copy can quickly fall out of date, frustrating users who expect the latest information.

A related problem is refreshing that data efficiently. Updates should reach the cache quickly without blocking the app; if they lag, users may see outdated or incorrect information and lose trust in the app.

Memory is also a big challenge. Mobile devices usually have less RAM than desktop computers, so developers have to choose carefully what data to keep cached and balance memory use against app speed.

Understanding and addressing these challenges is what keeps cached apps fast and reliable.

Conclusion

Optimizing memory caches is key to mobile app performance. By choosing the right caching strategies and refining them over time, developers keep apps fast and responsive.

As caching technology advances, it will keep improving how users experience apps on their phones.

As users expect ever-faster apps, understanding caching becomes more important. Developers should keep learning from guides, tutorials, and each other’s experience to stay current.

FAQ

What is memory caching in mobile environments?

Memory caching temporarily stores frequently used data in fast memory, so apps can retrieve it quickly instead of going back to the original source each time. This makes apps faster and smoother.

What are the different types of caching relevant for mobile applications?

There are a few types of caching for mobile apps. In-memory caching uses RAM for fast access. Distributed caching spreads data across servers so capacity can grow and data stays available. Client-side caching stores data on devices to speed up static content.

How does caching improve application performance?

Caching cuts wait times by serving data from fast memory. It also reduces the number of requests servers must handle, which saves money when many people use the app.

What caching strategies are effective for mobile applications?

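Common strategies are cache-aside, write-through, and read-through. With cache-aside, the application checks the cache first and loads missing data itself. Write-through writes to the cache and database together to keep them in sync. Read-through makes the cache the single access point, loading missing data on the app’s behalf.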

Why is it important to measure cache effectiveness?

Metrics such as hit rate and eviction rate show whether the cache is actually helping. They tell developers where to tune cache size, expiration, or strategy.

Can you provide examples of caching in real mobile applications?

Yes. E-commerce apps cache product information to speed up shopping, and mobile banking apps cache account data for quick access, taking care to limit how sensitive data is stored. Both keep users engaged.

How do I manage cache expiration policies effectively?

Managing cache expiration means finding the right balance between keeping data fresh and not wasting resources on unnecessary reloads. The right expiration time depends on how often the data changes and how often users access it.

What is the impact of garbage collection on caching in managed environments?

Frequent garbage collection pauses can slow an app down, especially when it is busy, and an unbounded cache adds to memory pressure. Keeping cache entries small and the cache size bounded helps caching stay efficient.

What techniques can enhance memory sharing in Android applications?

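Android shares memory between processes by forking every app from the Zygote process, which has already loaded common framework code and resources, and through explicitly allocated shared memory regions. This helps apps start faster and use less memory, improving overall performance.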

How can I ensure high availability in caching?

For high availability, use cache replication and distributed caching. This keeps apps running smoothly even when servers go down.

What are the common challenges of caching in mobile applications?

Caching challenges include keeping data fresh and handling memory limits. These issues can hurt app performance and user experience.